Robot Arm: Phase 1

Posted 12/06/2008 by Emil Valkov

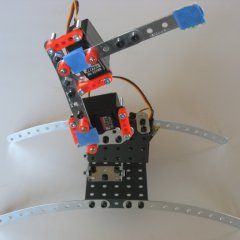

This robot arm is very simple: it uses only 2 servos, one for the “shoulder” and one for the “elbow”. Its main purpose is to experiment with ways of controlling it.

Here's a short video showing it in action.

Main direction

The goal for the software is to guide the arm's end-point to a location we select arbitrarily.

The main idea is for the robot to continually look at its own arm and decide how to move it closer to its goal, repeating this until it reaches the goal.

This allows for greater flexibility, because the software doesn't need to contain a precise mathematical model of the robot's structure before it runs.

Instead, it can learn about itself and infer this structure on the fly.

In a sense this can be compared to babies who also learn how their arms work from the moment they're born (and maybe even before).

Implementation

I control this robot arm with a Phidgets PhidgetAdvancedServo 8-Motor controller, which is pretty nice, as it connects directly to my laptop via USB.

No need for any serial adapters.

Phidgets provides libraries for talking to its controllers from most popular languages. I chose the C library for this project.

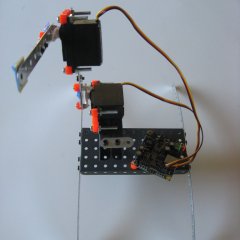

I use 2 Hitec HS-322HD servos (which came with the Phidgets controller), and parts from a regular Erector set.

Fastening the servos to the Erector set parts was a bit of a challenge, since they're not really meant to be compatible.

You can see 3 blue squares on the arm: shoulder, elbow, and end-point.

RoboRealm is used to locate them in the camera image and so track the position of the arm.
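
RoboRealm does the actual detection here. To make the idea concrete, here is a minimal pure-Python sketch of how blue squares could be located in a frame; it is not RoboRealm's implementation or API. It keeps pixels that are "blue enough", groups touching blue pixels with a flood fill, and returns the centroid of each group. The colour thresholds (`min_blue`, `max_other`) and the `min_pixels` noise filter are arbitrary assumptions, and the frame is assumed to be a list of rows of `(r, g, b)` tuples.

```python
from collections import deque

def is_blue(rgb, min_blue=150, max_other=100):
    """Hypothetical colour test: strong blue channel, weak red and green."""
    r, g, b = rgb
    return b >= min_blue and r <= max_other and g <= max_other

def find_blue_squares(image, min_pixels=4):
    """Return the (x, y) centroid of every connected patch of blue pixels.

    image: list of rows, each row a list of (r, g, b) tuples.
    Patches smaller than min_pixels are treated as noise and ignored.
    Centroids are returned in scan order (top to bottom, left to right).
    """
    height, width = len(image), len(image[0])
    seen = [[False] * width for _ in range(height)]
    centroids = []
    for y0 in range(height):
        for x0 in range(width):
            if seen[y0][x0] or not is_blue(image[y0][x0]):
                continue
            # Flood fill over 4-connected blue neighbours to collect one patch.
            seen[y0][x0] = True
            queue, patch = deque([(x0, y0)]), []
            while queue:
                x, y = queue.popleft()
                patch.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx] and is_blue(image[ny][nx])):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if len(patch) >= min_pixels:
                centroids.append((sum(p[0] for p in patch) / len(patch),
                                  sum(p[1] for p in patch) / len(patch)))
    return centroids
```

Grouping pixels into patches before averaging matters: averaging all blue pixels at once would merge the shoulder, elbow and end-point squares into a single meaningless point.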

For every frame it receives, the program determines the coordinates of the 3 blue squares and moves the servos so that the end-point gets closer to its goal.

Precision is not critical here because any errors will be corrected as the process is repeated many times.

The process is stopped once we get close enough to our goal.
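
The post doesn't spell out how each servo move is chosen, so the sketch below is one plausible way to do it, not necessarily the one used here. It assumes that turning a servo moves the end-point along a circle centred on that joint, so the end-point's direction of motion is perpendicular to the joint-to-end-point vector. Those two directions form a 2x2 Jacobian, and a damped least-squares step (my choice, to stay stable when the arm is nearly straight) moves the end-point part of the way toward the goal. `observe` is a hypothetical simulated arm (100 px and 80 px links, angles positive counter-clockwise) standing in for the camera and RoboRealm, and `noise` adds random tracking error to show that small measurement errors get corrected over repeated frames.

```python
import math
import random

def perp(v):
    """Direction a point at offset v moves when rotated counter-clockwise about the origin."""
    return (-v[1], v[0])

def control_step(shoulder, elbow, end, goal, gain=0.5, damping=10.0,
                 max_step=math.radians(5)):
    """One frame of control: servo increments (d_shoulder, d_elbow) in radians.

    Assumes each joint moves the end-point along a circle centred on that joint.
    gain, damping and max_step are arbitrary illustrative values.
    """
    j1 = perp((end[0] - shoulder[0], end[1] - shoulder[1]))  # end-point motion per radian of shoulder
    j2 = perp((end[0] - elbow[0], end[1] - elbow[1]))        # end-point motion per radian of elbow
    ex, ey = gain * (goal[0] - end[0]), gain * (goal[1] - end[1])
    # Damped least squares: d = J^T (J J^T + damping^2 I)^-1 e, written out for 2x2.
    a = j1[0] ** 2 + j2[0] ** 2 + damping ** 2
    b = j1[0] * j1[1] + j2[0] * j2[1]
    c = j1[1] ** 2 + j2[1] ** 2 + damping ** 2
    det = a * c - b * b
    ux, uy = (c * ex - b * ey) / det, (a * ey - b * ex) / det
    d1 = j1[0] * ux + j1[1] * uy
    d2 = j2[0] * ux + j2[1] * uy
    # Limit the larger move to max_step, scaling both so the direction is kept.
    biggest = max(abs(d1), abs(d2))
    if biggest > max_step:
        d1, d2 = d1 * max_step / biggest, d2 * max_step / biggest
    return d1, d2

def observe(t1, t2, l1=100.0, l2=80.0):
    """Hypothetical simulated camera + tracker: shoulder, elbow, end-point positions."""
    elbow = (l1 * math.cos(t1), l1 * math.sin(t1))
    end = (elbow[0] + l2 * math.cos(t1 + t2), elbow[1] + l2 * math.sin(t1 + t2))
    return (0.0, 0.0), elbow, end

def reach(goal, t1=0.3, t2=0.5, tolerance=1.0, noise=0.0, seed=0, max_frames=500):
    """Repeat observe -> step until the observed end-point is within tolerance of goal."""
    rng = random.Random(seed)

    def jitter(p):  # optional Gaussian tracking noise, in pixels
        return (p[0] + rng.gauss(0, noise), p[1] + rng.gauss(0, noise)) if noise else p

    for frame in range(max_frames):
        shoulder, elbow, end = (jitter(p) for p in observe(t1, t2))
        if math.dist(end, goal) < tolerance:
            return frame, t1, t2
        d1, d2 = control_step(shoulder, elbow, end, goal)
        t1, t2 = t1 + d1, t2 + d2
    return None
```

For example, `reach((60.0, 120.0))` returns the number of frames taken and the final servo angles, or `None` if the goal isn't reached within `max_frames`. Each step only needs to point roughly the right way, since the next frame measures the new position and corrects whatever error remains.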

Going forward

The camera is placed in front of the arm, so when a single servo moves, the end-point follows a circular path in the image.

If we were to place the camera at an angle, the path would become elliptical. The algorithm can still work, but only as long as the direction is not too far off and the algorithm's self-correcting capacity can compensate for the error.
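
A quick calculation shows how the direction error depends on the camera angle. It assumes a simplified camera model: orthographic, rotated by `tilt` about the image's vertical axis, so horizontal distances shrink by cos(tilt). A joint's circle then appears as an ellipse, and the direction the circular assumption predicts (perpendicular to the joint-to-end-point line in the image) differs from the true direction of motion. Working through the algebra, the worst case occurs at joint angles of 45° and equals atan(sin²(tilt) / (2·cos(tilt))): about 8° at a 30° tilt, 19.5° at 45° and 37° at 60°, approaching 90° as the tilt approaches 90°.

```python
import math

def tangent_error_deg(alpha, tilt):
    """Angle (degrees) between where the end-point really moves in the image and
    where the circular-path assumption says it moves.

    alpha: angle of the end-point on its circle around the joint (radians).
    tilt:  camera rotation about the image's vertical axis (radians); assumed
           orthographic model, so image x = world x * cos(tilt).
    """
    c = math.cos(tilt)
    # True image velocity: derivative of the projected point (cos(alpha) * c, sin(alpha)).
    true_dir = (-math.sin(alpha) * c, math.cos(alpha))
    # Assumed velocity: perpendicular to the joint-to-end-point vector seen in the image.
    radius = (math.cos(alpha) * c, math.sin(alpha))
    assumed_dir = (-radius[1], radius[0])
    dot = true_dir[0] * assumed_dir[0] + true_dir[1] * assumed_dir[1]
    cross = true_dir[0] * assumed_dir[1] - true_dir[1] * assumed_dir[0]
    return abs(math.degrees(math.atan2(cross, dot)))

def worst_case_error_deg(tilt, samples=360):
    """Largest direction error over a full turn, checked at `samples` evenly spaced angles."""
    return max(tangent_error_deg(2 * math.pi * k / samples, tilt) for k in range(samples))

for tilt_deg in (0, 15, 30, 45, 60, 75):
    print(tilt_deg, round(worst_case_error_deg(math.radians(tilt_deg)), 1))
```

The dot product of the two directions works out to cos(tilt), which stays positive, so a predicted step never points completely the wrong way; it just wastes more of each move as the tilt grows.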

However, the greater the angle, the larger those errors become, and the more visible the algorithm's limitations will be.

We need an algorithm that doesn't assume the path is circular, but instead learns about the actual movement on the fly, while it's running.
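
One way to learn the actual movement on the fly, swapped in here as an illustration rather than taken from this post, is to estimate the image Jacobian directly and keep correcting it with Broyden's rank-one update, a technique used in uncalibrated visual servoing. The sketch first nudges each servo once to get a finite-difference estimate, then after every move adjusts the estimate so it explains the end-point motion it just observed. It makes no assumption about circles or ellipses and tracks only the end-point. `camera` is a hypothetical simulated tilted camera (1 rad tilt, 100 px and 80 px links), and `probe`, `gain` and `max_step` are arbitrary choices.

```python
import math

def camera(t1, t2, tilt=1.0, l1=100.0, l2=80.0):
    """Hypothetical simulated tilted camera: only the end-point is tracked, and
    horizontal distances shrink by cos(tilt), so joint motion looks elliptical."""
    x = l1 * math.cos(t1) + l2 * math.cos(t1 + t2)
    y = l1 * math.sin(t1) + l2 * math.sin(t1 + t2)
    return (x * math.cos(tilt), y)

def reach_with_broyden(goal, t1=0.3, t2=0.5, probe=0.05, gain=0.5,
                       max_step=math.radians(5), tolerance=1.0, max_frames=1000):
    # 1. Initial Jacobian estimate: nudge each servo once (and back) and watch the end-point.
    p = camera(t1, t2)
    p1, p2 = camera(t1 + probe, t2), camera(t1, t2 + probe)
    J = [[(p1[0] - p[0]) / probe, (p2[0] - p[0]) / probe],
         [(p1[1] - p[1]) / probe, (p2[1] - p[1]) / probe]]
    for frame in range(max_frames):
        ex, ey = goal[0] - p[0], goal[1] - p[1]
        if math.hypot(ex, ey) < tolerance:
            return frame, t1, t2
        # 2. Solve J d = gain * error with the current estimate (Cramer's rule).
        det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
        if abs(det) < 1e-9:
            return None  # estimate became singular; a real system could re-probe here
        d1 = gain * (ex * J[1][1] - ey * J[0][1]) / det
        d2 = gain * (ey * J[0][0] - ex * J[1][0]) / det
        biggest = max(abs(d1), abs(d2))
        if biggest > max_step:
            d1, d2 = d1 * max_step / biggest, d2 * max_step / biggest
        t1, t2 = t1 + d1, t2 + d2
        new_p = camera(t1, t2)
        # 3. Broyden update: J += (observed move - predicted move) * d^T / |d|^2
        predicted = (J[0][0] * d1 + J[0][1] * d2, J[1][0] * d1 + J[1][1] * d2)
        norm2 = d1 * d1 + d2 * d2
        for i in range(2):
            residual = (new_p[i] - p[i]) - predicted[i]
            J[i][0] += residual * d1 / norm2
            J[i][1] += residual * d2 / norm2
        p = new_p
    return None
```

For example, `reach_with_broyden(camera(1.2, 1.4))` drives the end-point to where that arbitrary pose appears in the tilted image, although the control code itself never uses the tilt or the link lengths.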

Strictly speaking, not all 3 blue squares are required. We can infer the positions of the shoulder and elbow by studying the movement of the end-point: in the case of circular movement, it's possible to compute the center of the circle of motion.
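
Under the circular-motion assumption, three end-point positions are enough to find a joint. For example, hold the shoulder still, move only the elbow servo, and record three end-point positions: the elbow is the center of the circle through them, which is the circumcenter of the triangle they form. Moving only the shoulder gives the shoulder the same way. A minimal sketch using the standard circumcenter formula; `circle_center` and the simulated arm in the usage lines are hypothetical.

```python
import math

def circle_center(p1, p2, p3):
    """Center of the circle through three (x, y) points (their circumcenter)."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    d = 2 * (x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))
    if abs(d) < 1e-9:
        raise ValueError("points are (nearly) collinear; no unique circle")
    s1, s2, s3 = x1 * x1 + y1 * y1, x2 * x2 + y2 * y2, x3 * x3 + y3 * y3
    cx = (s1 * (y2 - y3) + s2 * (y3 - y1) + s3 * (y1 - y2)) / d
    cy = (s1 * (x3 - x2) + s2 * (x1 - x3) + s3 * (x2 - x1)) / d
    return cx, cy

# Usage with a hypothetical simulated arm (100 px / 80 px links): hold the
# shoulder at 0.4 rad, move only the elbow, and recover the elbow position.
elbow = (100 * math.cos(0.4), 100 * math.sin(0.4))
samples = [(elbow[0] + 80 * math.cos(0.4 + t2), elbow[1] + 80 * math.sin(0.4 + t2))
           for t2 in (0.2, 0.6, 1.1)]
print(circle_center(*samples), elbow)  # the two should agree up to rounding
```

The three samples should be well spread along the arc: points that are close together are nearly collinear, and small tracking errors then move the computed center a long way.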

It would greatly simplify tracking if we only had 1 blue square to track instead of three.

That's it for now. Stay tuned for phase 2...